Why bother with energy modelling of buildings? It’s a simple question with, on one level, some simple answers, but I think it’s worth stepping back to it from time to time, to keep things in perspective. The usual answers are something like: to test design ideas without having to build them; to help understand how a building will work; and ultimately, to make better buildings. Oh, and to comply with building regulations.

Sadly, the last part seems to be the only reason some models get done, and the criterion we set is ‘what is the minimum we have to do not to fail’ rather than ‘what’s the best we can feasibly achieve’, but I do not want to pursue that well-worn topic here. Before we go any further, I should highlight that the words ‘model’ and ‘simulation’ have specific meanings, but I will abuse them here for the sake of prose. I will use ‘model’ and ‘modelling’ in a general sense to include the act of simulation, and hope the context makes my meaning clear in each case.

Assuming the intention is to deliver excellent buildings, I would choose to frame the problem as creating a ‘Good Enough’ model. This is an extension of the idea encapsulated by statistician George Box in the quote “All models are wrong; some models are useful”. The phrase ‘A Good Enough Parent’ was coined by Donald Winnicott and is shorthand for a theory of child development which suggests that ‘manageable failure’ on the part of parents with respect to their children is not just normal but positive; it prepares children for separation and life in the real world by breaking down the illusion of omnipotence and withdrawing support in a constructive way. The idea can be applied to energy modelling of buildings but, I would be the first to admit, in a slightly oblique manner.

If we turn to the first part of Mr Box’s statement, it is useful to consider the plethora of ways in which typical building models are wrong. Usually (not always), we adopt weather files that represent likely or typical conditions, which will inevitably vary from reality. The same can be said of occupant behaviour, which can be even more difficult to predict or, more specifically, to locate in a domain of risk as opposed to uncertainty (more on this later). In both cases, statistical approaches are often adopted, such as setting up ‘average’ criteria, which will seldom if ever match reality. Many models do not adequately account for thermal bridging (a particular bugbear of mine). The physical characteristics of the materials in the model can also differ from reality, whether because laboratory conditions differ from site conditions, because the performance data is misleading, or for a reason as simple as a different material being used.

These are just some of the more obvious examples. How well do we understand the impact of these vagaries on the answer we yield from our model? Maybe not as well as we like to think, as Bath University’s paper, which I commented on last year, suggests. In order to embrace the second part of the Box quote and render useful models, it’s critical to understand why, how and to what extent the assumptions built into the model, often at a deep level, are wrong. Some models make this easier than others – would you rather have a Black Box or a George Box?

One could therefore argue that a simpler model, which is perhaps a little more wrong, can be more useful. It is failing in manageable ways, in ways we can understand and therefore take into account when making design decisions; it is a good enough model. I sometimes express this as ‘human scale’ models – where the maths and physics can be understood in a reasonable amount of time by a competent designer. I would acknowledge that this lays me open to accusations of laziness – as an engineer I should make the time, and be comfortable enough with the maths and physics, to understand them. A more charitable explanation is that Good Enough Models make business sense. I have already spent many hours of my life learning how complex models work, gathering experience with them, adjusting inputs, waiting for simulations to run, re-running them when I make a tiny mistake, and post-processing results. If a simpler model cuts down on this detailed, time-consuming work for only a modest loss of accuracy, I can answer my client’s questions faster.

When we make a model, there are three separate things going on. First, obviously, is the mathematical representation within a computer (or perhaps just in a notebook and calculator); secondly, there is the real thing, often occurring in the future, which the computer model represents. Finally, there is a model in our mind, the one we compare the computer to if we get unexpected results. The model in our mind is in effect our understanding of maths and physics combined with our experience. There are therefore at least two degrees of separation between the real world and our design decisions. I hypothesise that this is one reason for the surprising conclusions in the Bath University work. When we are using models that we do not fully understand, we tend to fall back on our understanding of the real world as a proxy for the model. For example, one often hears energy modellers make suggestions to ‘open the windows further, or for longer’, as opposed to ‘we could assign a larger opening proportion or assume a longer schedule to the Euclidean plane that represents the window’. This is a banal example, but I believe turns of phrase such as these betray the nature of the mental model. This falling back on experienced reality as a proxy for the model is arse-about-face.

The opening question ‘why do we model?’ is rather simplistic and general; in practice we model to answer questions about our design such as ‘how much insulation should there be?’.

When modelling, presenting and interpreting results, it is vital to deal in terms of risk and uncertainty, and to be clear about what each of those things means. Nassim Taleb (the friendly but elusive statistician-philosopher-trader) takes the Rumsfeldian concept of known unknowns and labels them risks, and takes unknown unknowns and labels them uncertainties. We can evaluate risk (the building will be 10 m long ± 100 mm), but we are uncertain as to whether the building will be struck by lightning and burn down before it is complete. Actuaries reading this will object that the latter can indeed be quantified (and therefore insured), and this in fact illustrates the point. With appropriate models, we can transpose factors from the domain of uncertainty into that of risk; this is why we model. To mangle Martin Holladay’s concept, we can have a ‘probably-good house’ by adopting sensible U-values, paying attention to detailing, airtightness and MVHR, and getting good windows. Modelling won’t guarantee the energy consumption, but it can place it somewhere in a realm of greater probability and, crucially, help us make better design decisions.
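To make the idea of moving a factor from uncertainty into risk a little more concrete, here is a minimal sketch of a ‘human scale’ calculation: a steady-state fabric heat loss estimate, run many times with inputs drawn from assumed distributions. Everything numerical here is my own illustrative assumption (the U-value, its spread, the envelope area, the heating degree days), and the function names are hypothetical; the point is only that the answer comes out as a range rather than a single figure.

```python
import random
import statistics


def annual_fabric_heat_loss_kwh(u_value, area_m2, degree_days):
    """Steady-state fabric heat loss: U * A * degree-days * 24 h, in kWh."""
    return u_value * area_m2 * degree_days * 24 / 1000


def sample_heat_loss(n_runs=10_000, seed=1):
    """Repeat the calculation with inputs drawn from assumed distributions."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    results = []
    for _ in range(n_runs):
        # Assumed: as-built U-value scatters around the design value (W/m2K).
        u_value = max(rng.gauss(0.18, 0.03), 0.05)
        # Assumed: heating degree days vary from year to year (K.day).
        degree_days = rng.gauss(2200, 200)
        # Assumed: 150 m2 of heat loss area, treated as known.
        results.append(annual_fabric_heat_loss_kwh(u_value, 150.0, degree_days))
    return results


results = sample_heat_loss()
cuts = statistics.quantiles(results, n=20)  # 19 cut points: 5%, 10%, ... 95%
p5, p50, p95 = cuts[0], cuts[9], cuts[18]
print(f"Fabric heat loss: median {p50:.0f} kWh/yr, "
      f"90% of runs between {p5:.0f} and {p95:.0f} kWh/yr")
```

The calculation is crude, but it is simple enough that every source of spread can be named and challenged, which is rather the point.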

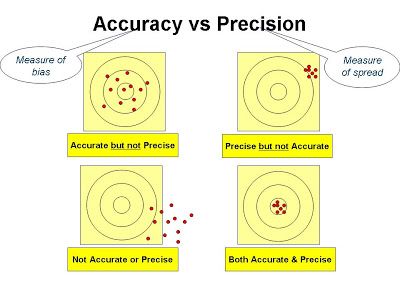

The old comparison between precision and accuracy is also very relevant here. The diagram here represents the idea from the perspective of an archer. The precise archer can align his sights with the target very reliably; the accurate archer makes sure his sights are well calibrated. Needless to say, the best archers adjust their sights and can shoot repeatably. We can therefore be very precise, but still wrong. In fact, if we are precise but inaccurate we will be more consistently wrong than if we are accurate but imprecise. As energy modellers we should therefore value accuracy over precision, and model accordingly. Does it make sense to spend time working out the varying hourly internal gains for every different room type, or is it good enough to assume a global, static average? That probably depends on the question or problem to be solved, the stage of design development and so on, but I would advocate more ‘quick and dirty’ modelling whenever that is Good Enough. This means selecting the right tools for the question and the moment, and also using them wisely.
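The archer analogy can be put into numbers with a small sketch. Two archers are simulated along a single axis: one with a tight grouping but miscalibrated sights, the other well calibrated but with more scatter. The bias and spread values are arbitrary assumptions chosen for illustration, and the function names are hypothetical; with other numbers the comparison of overall error could come out differently.

```python
import random
import statistics


def shoot(bias, spread, n_shots=1000, seed=0):
    """Simulate horizontal miss distances (cm) from the bullseye at 0."""
    rng = random.Random(seed)
    return [rng.gauss(bias, spread) for _ in range(n_shots)]


def rms_error(shots):
    """Root-mean-square distance from the bullseye: overall 'wrongness'."""
    return (sum(x * x for x in shots) / len(shots)) ** 0.5


# Assumed values: tight grouping, but sights are 5 cm off.
precise_archer = shoot(bias=5.0, spread=0.5, seed=1)
# Assumed values: sights on target, but a looser grouping.
accurate_archer = shoot(bias=0.0, spread=2.0, seed=2)

for name, shots in [("precise", precise_archer), ("accurate", accurate_archer)]:
    print(f"{name:>8}: mean offset {statistics.mean(shots):5.2f} cm, "
          f"scatter {statistics.stdev(shots):4.2f} cm, "
          f"RMS error {rms_error(shots):4.2f} cm")
```

The mean offset stands for accuracy and the scatter for precision. With these assumed numbers, the precise archer misses by about the same amount every time, while the accurate archer's looser grouping still lands closer to the bullseye on average.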

Ultimately it makes good business sense; a simpler model may often be faster to create and interpret, and it is likely to make you more aware of risks that more sophisticated techniques may mask.

So, energy modellers: next time you embark on a project, pause for a moment and think: is what I’m about to do necessary and sufficient, is it the fastest way of earning my fee, and is it the best way of de-risking? And any clients and other design team members reading this: are you asking the right questions of your energy people, and are they giving you answers that are precise or accurate? Are they expressing the risks and uncertainties? Is the modelling Good Enough?